Overview

Still wondering which file transfer protocol is right for your business? Here are a dozen to choose from, each with a brief description to make your choice easier.

1. FTP (File Transfer Protocol)

When it comes to business file transfers, FTP is probably the first protocol that comes to mind. FTP is built for both single file and bulk file transfers. It’s been around for quite some time, so you likely won’t have problems with interoperability: there’s a good chance your trading partner will be able to exchange information through it. You won’t have trouble finding a client application for your end users either.

The downside is that this file transfer protocol is weak on security: plain FTP sends credentials and data unencrypted. Hence, if you need to comply with data security/privacy laws and regulations like HIPAA, PCI-DSS, SOX, GLBA, and the EU Data Protection Directive, stay away from it. Choose FTP only if your business does NOT:

- Operate in a highly regulated industry like healthcare, finance, or manufacturing;

- Send/receive sensitive files; or

- Trade publicly (and hence fall under SOX).

Another problem with FTP is its susceptibility to firewall issues, which can adversely affect client connectivity. Read Active vs. Passive FTP Simplified to understand the problem and learn how to resolve it.
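To make the firewall problem a little more concrete: in active mode the client sends a PORT command telling the server which address and port to connect back to, and that inbound connection is what client-side firewalls tend to block. In passive mode the server advertises a port in its 227 reply and the client connects outward instead. The Python sketch below only shows how the six comma-separated numbers in those messages encode an address and port, as defined in RFC 959. The function names and sample addresses are made up for illustration.

```python
import re


def format_port_argument(host: str, port: int) -> str:
    """Build the argument of an active-mode PORT command (RFC 959)."""
    if not 0 < port < 65536:
        raise ValueError("port out of range")
    high, low = divmod(port, 256)  # the port travels as two separate bytes
    return ",".join(host.split(".") + [str(high), str(low)])


def parse_pasv_reply(reply: str) -> tuple[str, int]:
    """Extract (host, port) from a passive-mode 227 reply."""
    match = re.search(r"(\d+),(\d+),(\d+),(\d+),(\d+),(\d+)", reply)
    if match is None:
        raise ValueError("no address found in reply")
    numbers = [int(n) for n in match.groups()]
    host = ".".join(str(n) for n in numbers[:4])
    port = numbers[4] * 256 + numbers[5]
    return host, port


# Illustrative values only; these addresses are not from the article.
print("PORT " + format_port_argument("192.168.1.10", 50000))
print(parse_pasv_reply("227 Entering Passive Mode (203,0,113,5,195,80)"))
```

If you script transfers with Python’s standard `ftplib`, passive mode is already the default, and `FTP.set_pasv(False)` switches a connection to active mode.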

2. HTTP (Hypertext Transfer Protocol)

Like FTP, HTTP is a widely used protocol. It’s easy to implement, especially for person-to-server and person-to-person file transfers (read Exploring Use Cases for Managed File Transfer for reference). Users only need a Web browser like Chrome, Firefox, Internet Explorer, or Safari, and they’ll be ready to go. No installation needed on the client side.

HTTP is also less prone to firewall issues (unlike FTP). However, like FTP, HTTP by itself is inherently insecure: it can neither protect data in transit nor satisfy regulatory requirements. Use HTTP only if the lack of security is not an issue for you.

Recommended post: How to Set Up a Web File Transfer

3. FTPS (FTP over SSL)

The good news is that both FTP and HTTP now have secure versions. FTP has FTPS, while HTTP has HTTPS. Both are protected through SSL (or TLS, its modern successor). If you use FTPS, you retain the benefits of FTP but gain the security features that come with SSL, including data-in-motion encryption as well as server and client authentication. Because FTPS is based on FTP, you’ll still be subject to the same firewall issues that come with FTP.
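For readers who script their transfers, here is a minimal sketch of an FTPS upload using `ftplib.FTP_TLS` from Python’s standard library. It assumes explicit FTPS (the connection is upgraded to TLS after connecting). The host name, credentials, and file names in the usage comment are placeholders, and `connect` and `upload_over_ftps` are names chosen for this example.

```python
import ssl
from ftplib import FTP_TLS


def connect(host):
    """Open an explicit-FTPS connection that verifies the server certificate."""
    context = ssl.create_default_context()
    return FTP_TLS(host, context=context)


def upload_over_ftps(ftps, user, password, local_path, remote_name):
    """Log in, protect the data channel, then upload one file.

    `ftps` is expected to be a connected ftplib.FTP_TLS object.
    """
    ftps.login(user, password)  # FTP_TLS secures the control channel before sending credentials
    ftps.prot_p()               # without this call the file data itself travels unencrypted
    with open(local_path, "rb") as handle:
        ftps.storbinary(f"STOR {remote_name}", handle)


# Hypothetical usage (placeholder host and credentials):
# ftps = connect("ftp.example.com")
# upload_over_ftps(ftps, "alice", "secret", "report.csv", "report.csv")
# ftps.quit()
```

The `prot_p()` call is the step that is easy to miss: logging in over TLS protects the credentials, but the data channel is only encrypted once protection is switched on.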

Organizations in the Legal, Government, and Financial Services industries might want to consider FTPS as an option.

Recommended post: Securing Trading Partner File Transfers w/ Auto PGP Encryption & FTPS

4. HTTPS (HTTP over SSL)

As mentioned earlier, HTTPS is the secure version of HTTP. If you don’t like having to install client applications for your end users and most of your end users are non-technical folks, this might be the perfect choice. It’s secure and very user-friendly compared to FTP/S.

Recommended post: How To Set Up A HTTPS File Transfer

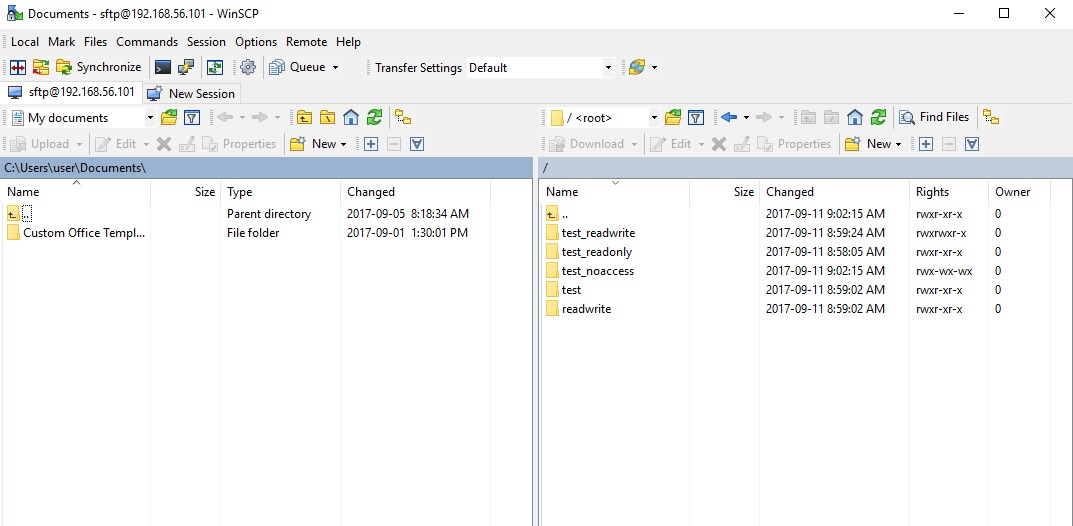

5. SFTP (SSH File Transfer Protocol)

Here’s another widely used file transfer protocol that’s perfect for businesses that require privacy/security capabilities. SFTP runs on SSH, a secure protocol that, like SSL, supports data-in-motion encryption and client/server authentication. The main advantage of SFTP over FTPS, the protocol it is usually compared with, is that it’s more firewall-friendly.

Recommended post: Business Benefits Of An SFTP Server

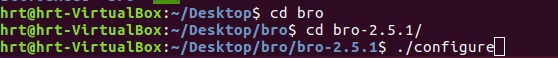

6. SCP (Secure Copy)

This is an older, more primitive alternative to SFTP. It also runs on SSH, so it comes with the same security features. However, if you’re using a recent version of SSH, you’ll already have access to both SCP and SFTP. Since SFTP has more functionality, I would recommend it over SCP. The only instance you’ll probably need SCP is if you’ll be exchanging files with a company that only has a legacy SSH server.

Recommended post: Various Linux SCP Examples To Get You Started With Using Secure Copy

7. WebDAV (Web Distributed Authoring and Versioning)

Most of the file transfer protocols we’ve discussed so far are primarily used for file transfers. Here’s one that can do more than that. WebDAV, which actually runs over HTTP, is mainly designed for collaboration activities. Through WebDAV, users won’t just be able to exchange files. They’ll also be able to collaborate over a single file even if they’re working from different locations. WebDAV is probably best suited for organizations that need distributed authoring capabilities, e.g. universities and research institutions.
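Running over HTTP means, in practice, that WebDAV adds its own request methods (such as PROPFIND for listing properties, and LOCK/UNLOCK for coordinating edits) on top of ordinary HTTP. The following Python sketch prepares a PROPFIND request with the standard library without sending it. The URL is a placeholder and `build_propfind` is a name invented for this example.

```python
import urllib.request


def build_propfind(url: str, depth: str = "1") -> urllib.request.Request:
    """Prepare (but do not send) a WebDAV PROPFIND request.

    Depth "0" asks about the resource itself, "1" also covers its
    immediate children.
    """
    return urllib.request.Request(url, method="PROPFIND", headers={"Depth": depth})


# Placeholder URL, for illustration only.
request = build_propfind("http://dav.example.com/shared/")
print(request.get_method(), request.full_url, request.get_header("Depth"))
```

Passing the prepared request to `urllib.request.urlopen` would send it. A WebDAV server normally answers with an XML document describing each resource.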

8. WebDAVS

By now, you should be able to guess what the S stands for. That’s right: WebDAVS is a secure version of WebDAV. If WebDAV runs over HTTP, WebDAVS runs over HTTPS. That means it exhibits the same characteristics as WebDAV, plus the security features of SSL.

9. TFTP (Trivial File Transfer Protocol)

This file transfer protocol is different from the rest in that you won’t be using it for exchanging documents, images, or spreadsheets. In fact, you normally won’t be using it for exchanging files with machines outside of your network. TFTP is better suited for network management tasks like network booting, backing up configuration files, and installing operating systems over a network. Why did we include it here? Well, it is a file transfer protocol and you certainly can use it in your business (albeit internally).
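Part of the reason TFTP suits tasks like network booting is how little there is to it: no login step, no directory listing, and a transfer that starts with a single UDP datagram. As a rough illustration, this Python sketch builds the read request packet described in RFC 1350. The file name is invented, and nothing is actually sent.

```python
import struct

OPCODE_RRQ = 1  # "read request" opcode, per RFC 1350


def build_read_request(filename: str, mode: str = "octet") -> bytes:
    """Build a TFTP read request: opcode, filename, NUL, mode, NUL."""
    return (
        struct.pack("!H", OPCODE_RRQ)          # 2-byte opcode in network byte order
        + filename.encode("ascii") + b"\x00"   # NUL-terminated file name
        + mode.encode("ascii") + b"\x00"       # "octet" means a binary transfer
    )


# Hypothetical file name, for illustration only.
packet = build_read_request("router-config.bak")
print(packet)
```

A client would send these bytes to UDP port 69 on the server. Note the absence of any credentials, which is one reason TFTP is best kept inside your own network.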

If you want to learn more about TFTP, the article What Is TFTP? would be a good place to start.

10. AS2 (Applicability Statement 2)

Although nearly all of the protocols discussed earlier are capable of supporting B2B exchanges, there are a few protocols that are really designed specifically for such tasks. One of them is AS2.

AS2 is built for EDI (Electronic Data Interchange) transactions, the automated information exchanges normally seen in the manufacturing and retail industries. EDI is now also used in healthcare, as a result of the HIPAA legislation (read Securing HIPAA EDI Transactions with AS2). If you operate in these industries or need to carry out EDI transactions, AS2 is an excellent choice.

Recommended post: You Know It’s Time To Implement Server To Server File Transfer When..

11. OFTP (Odette File Transfer Protocol)

Another file transfer protocol specifically designed for EDI is OFTP. OFTP is quite common in Europe, so if you transact with companies there, you might need this. Both OFTP and AS2 are inherently secure and even support electronic delivery receipts (read What Is An AS2 MDN?), making them perfect for B2B transactions.

12. AFTP (Accelerated File Transfer Protocol)

WAN file transfers, especially those carried out over great distances, are easily affected by poor network conditions like latency and packet loss, which result in considerably degraded throughput. AFTP is a TCP-UDP hybrid that makes file transfers virtually immune to these network conditions. If you want to see the big difference AFTP makes, read the post Accelerated File Transfer In Action.

For a detailed explanation of the effects of latency and packet loss and how AFTP makes them virtually negligible, download the white paper How to Boost File Transfer Speeds 100x Without Increasing Your Bandwidth.
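To get a feel for why latency alone hurts TCP-based transfers, consider that a single TCP connection can have at most one window of unacknowledged data in flight per round trip. The Python sketch below works out that ceiling. It describes TCP in general, not how AFTP works internally, and the 64 KiB window (the classic maximum without window scaling) and the round-trip times are assumptions picked for illustration.

```python
def max_tcp_throughput_mbps(window_bytes: int, rtt_seconds: float) -> float:
    """Upper bound on single-connection TCP throughput: one window per round trip."""
    return window_bytes * 8 / rtt_seconds / 1_000_000


# Assumed values: a 64 KiB window and three sample round-trip times.
for rtt_ms in (10, 100, 250):
    mbps = max_tcp_throughput_mbps(64 * 1024, rtt_ms / 1000)
    print(f"RTT {rtt_ms:>3} ms -> at most {mbps:.1f} Mbit/s")
```

With these assumed numbers the ceiling falls from roughly 52 Mbit/s at 10 ms to about 2 Mbit/s at 250 ms, regardless of how much bandwidth the link itself offers.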

Companies in the Film and Manufacturing industries would find this protocol very useful.